The internet is moving towards a decentralized, user-controlled model: Web3. Businesses that want to build on it need to adapt, and building in this new landscape demands a fresh architectural perspective. This article covers the essential components and considerations for designing a secure Web3 application. We’ll explore the design process through a case study: a hypothetical platform for trading and tracking aviation parts.

The Web3 dApp Development Journey

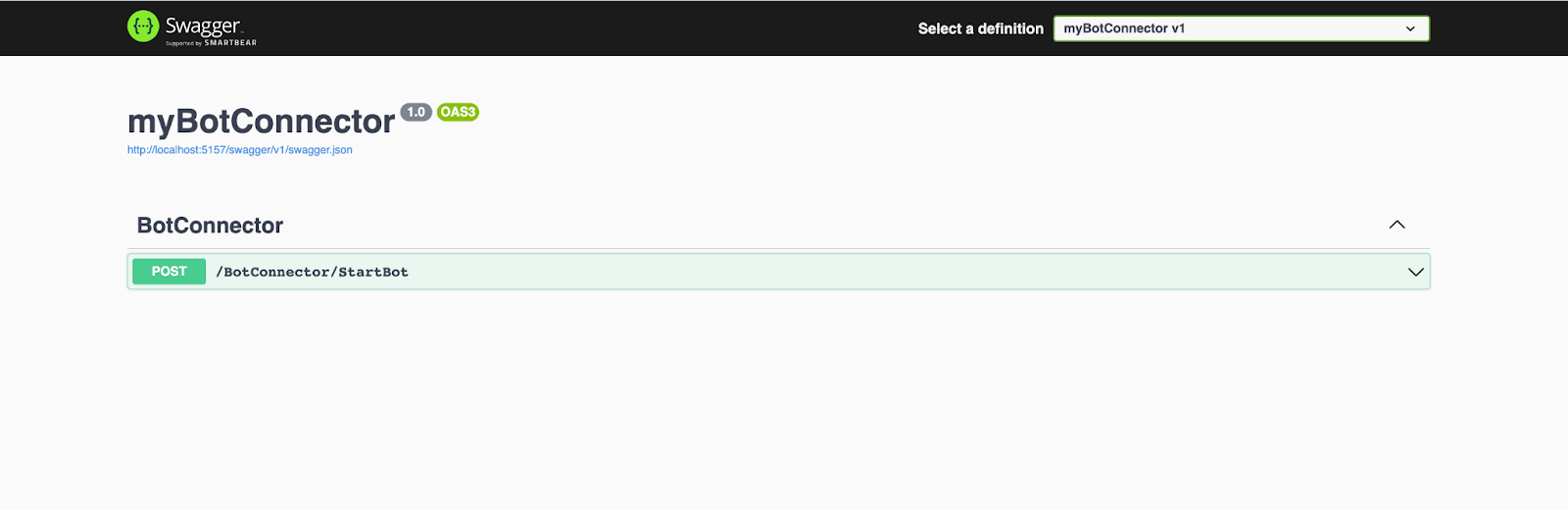

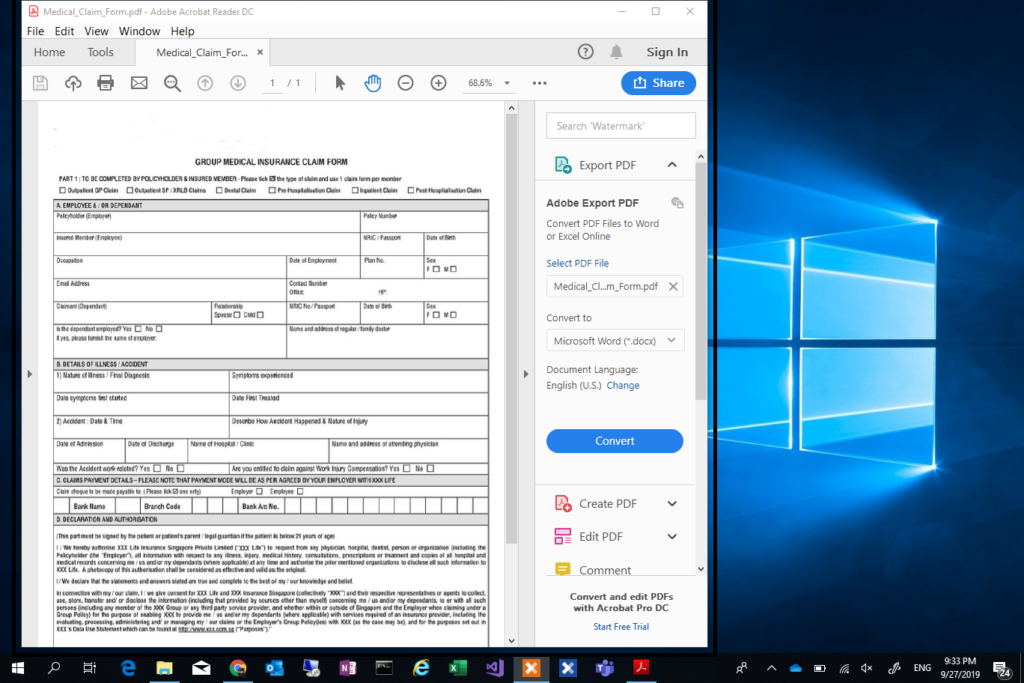

Before diving into the specifics of architecture design, it’s crucial to establish a holistic understanding of the Web3 development process. This journey starts with grasping the fundamentals of Web3 solutions and technologies, and culminates in architecting enterprise-grade Web3 solutions. The following diagram outlines the essential knowledge you’ll need on this path.

By revisiting the foundational Web3 development process, we can solidify our understanding of the key design components that will be crucial for optimizing your Web3 application’s architecture. Let’s delve into some essential terms and how they work together:

- NFTs (Non-Fungible Tokens): Imagine unique digital certificates stored on a blockchain. These can represent ownership of digital assets like artwork, collectibles, even in-game items. Each NFT is one-of-a-kind and irreplaceable.

- Custom Data Objects: Think of these as pieces of information created by smart contracts and stored directly on the blockchain. They can be simple text or numbers, or even complex data structures. Smart contracts have full control over creating and managing these data objects.

- On-chain Data/Code: This forms the core of a blockchain. Data and code stored on-chain are replicated across the computers (nodes) in the network and, on a public blockchain, can be read by anyone. Once a transaction is confirmed, it is impractical to alter, which is what gives on-chain information its transparency and immutability (unchangeable nature).

- Off-chain Data/Code: While not directly on the blockchain, off-chain data and code (for example databases, file stores, and backend services) still play a vital role in blockchain applications. Unlike on-chain data, they’re not replicated on every node and aren’t necessarily publicly accessible.
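To make the on-chain/off-chain split concrete, here is a minimal Python sketch of one common pattern: keep a bulky document off-chain and record only its hash on-chain, so the document can later be checked for tampering. The two dictionaries, the function names, and the sample certificate are hypothetical stand-ins chosen for illustration; a real system would use a database or file store on one side and contract storage on the other.

```python
import hashlib
import json

# Hypothetical stand-ins: plain dicts play the role of each storage layer.
off_chain_store = {}    # part id -> full certificate document (e.g. a database)
on_chain_records = {}   # part id -> SHA-256 digest (e.g. contract storage)


def fingerprint(document):
    """Return a SHA-256 hex digest of a document in canonical JSON form."""
    canonical = json.dumps(document, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def register_certificate(part_id, certificate):
    """Store the full document off-chain and only its digest on-chain."""
    off_chain_store[part_id] = certificate
    on_chain_records[part_id] = fingerprint(certificate)


def verify_certificate(part_id):
    """Check that the off-chain document still matches the on-chain digest."""
    return fingerprint(off_chain_store[part_id]) == on_chain_records[part_id]


register_certificate("PN-1001", {"part_number": "PN-1001", "status": "airworthy"})
print(verify_certificate("PN-1001"))  # True while the document is unchanged
```

The design choice here is that the blockchain holds a small, fixed-size value while the larger and possibly confidential document stays off-chain.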

Relationships and Interactions:

These components work together to build a robust Web3 application. Here’s a glimpse of how they interact:

- Smart Contracts: These are self-executing programs deployed on a blockchain. They can use on-chain data and code (like NFTs and custom data objects) to define rules and automate processes within your application.

- Web3 Wallets: These act as digital vaults for the private keys that control a user’s cryptocurrency and other on-chain assets, and they let users sign transactions to interact with dApps (decentralized applications) built on blockchains. Users might need a Web3 wallet to interact with your application, perhaps to buy or trade NFTs.

- Self-Sovereign Identity (SSI): This emerging concept empowers users to control their own digital identity data. Imagine a future where users can prove their identity to your application using an SSI solution, without relying on centralized authorities.

By understanding these core components and their relationships, you’ll be well-equipped to make informed decisions about on-chain vs. off-chain data storage, and ultimately design an optimized architecture for your Web3 application.

Aviation Parts Trading and Tracking Platform

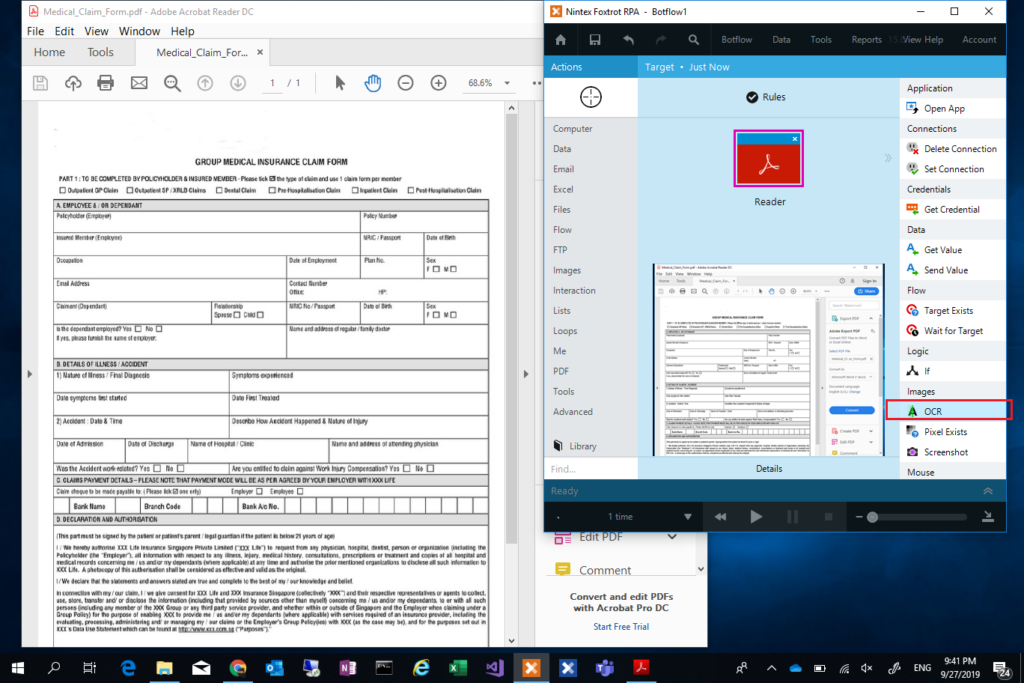

Let’s delve into aviation parts trading and tracking use cases. We’ve identified key challenges and how blockchain addresses them. Here’s the data flow outlining the process:

Data Flow

- Seller lists a part: Uploads details like part number, condition, certifications, and asking price to the listing platform. This information is then reflected in the smart contract.

- Buyer finds a part: Searches the listing platform and identifies a desired part.

- Negotiation (optional): Buyer and seller may negotiate price and terms off-chain.

- Purchase agreement: Buyer interacts with the smart contract, locking in the agreed price (crypto or traditional currency converted at purchase).

- Regulatory Check (optional): For safety-critical parts, the system might interact with a regulatory compliance system to verify the part meets airworthiness standards.

- Payment Processing: The payment gateway facilitates the secure transfer of funds from buyer to seller.

- Ownership Transfer: Upon successful payment, the smart contract automatically updates ownership of the part in the blockchain ledger.

- Shipping and Logistics: The buyer and seller arrange physical delivery of the part outside the blockchain system.
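The on-chain part of this flow can be read as a small state machine. The following Python sketch is an in-memory simulation under stated assumptions, not contract code: the class, state names, and method names are hypothetical, the optional regulatory check is omitted, and payment confirmation is reduced to a single call that a payment gateway integration would make.

```python
from enum import Enum, auto


class SaleState(Enum):
    LISTED = auto()
    AGREED = auto()
    PAID = auto()
    TRANSFERRED = auto()


class PartSale:
    """In-memory stand-in for the contract state of one listed part."""

    def __init__(self, part_number, seller, asking_price):
        self.part_number = part_number
        self.owner = seller
        self.price = asking_price
        self.buyer = None
        self.state = SaleState.LISTED

    def _require(self, expected):
        # Reject calls that arrive out of order, as a contract would revert.
        if self.state is not expected:
            raise RuntimeError(f"expected {expected.name}, got {self.state.name}")

    def agree(self, buyer, agreed_price):
        """Buyer locks in the price negotiated off-chain."""
        self._require(SaleState.LISTED)
        if agreed_price <= 0:
            raise ValueError("agreed price must be positive")
        self.buyer = buyer
        self.price = agreed_price
        self.state = SaleState.AGREED

    def confirm_payment(self):
        """Called once the payment gateway reports a successful transfer."""
        self._require(SaleState.AGREED)
        self.state = SaleState.PAID

    def transfer_ownership(self):
        """Update the recorded owner after payment has been confirmed."""
        self._require(SaleState.PAID)
        self.owner = self.buyer
        self.state = SaleState.TRANSFERRED


sale = PartSale("PN-1001", seller="Seller Co", asking_price=5000)
sale.agree(buyer="Buyer Air", agreed_price=4800)
sale.confirm_payment()
sale.transfer_ownership()
print(sale.owner)  # Buyer Air
```

Guarding each step with the expected state is what prevents, for example, an ownership transfer before payment.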

Design Considerations for Aviation Parts Platform

To create an effective platform, we need to consider these key factors:

- Target Audience: Identify the primary users (e.g., airlines, parts manufacturers, maintenance providers). Understanding their needs shapes platform functionalities.

- User Journey: Consider how users will interact with the platform. This includes finding parts, listing/selling parts, and managing their accounts.

- User Onboarding: Define how users will register and verify their identities on the platform, ensuring a secure and trustworthy environment.

- Data Model: Identify the core data components needed to track and manage aviation parts effectively. This might include:

  - Parts/Assets: Information like part number, manufacturer, condition, service history, and current location.

  - Companies: Data on airlines, parts suppliers, and maintenance providers involved in the ecosystem.

  - Transactions: Records of part purchases, ownership transfers, and service events.
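As a rough illustration of this data model, the three components could be sketched as Python dataclasses. The field names and the `record_transfer` helper are assumptions made for this example; the actual schema would depend on the platform’s requirements and on which fields are kept on-chain.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Company:
    company_id: str
    name: str
    role: str  # e.g. "airline", "supplier", "maintenance provider"


@dataclass
class Transaction:
    part_number: str
    kind: str  # e.g. "purchase", "ownership_transfer", "service_event"
    from_company: str
    to_company: str
    timestamp: int  # Unix time in seconds


@dataclass
class Part:
    part_number: str
    manufacturer: str
    condition: str
    location: str
    owner_id: str
    history: List[Transaction] = field(default_factory=list)


def record_transfer(part, to_company, timestamp):
    """Append an ownership transfer to the part's history and update its owner."""
    tx = Transaction(part.part_number, "ownership_transfer",
                     part.owner_id, to_company.company_id, timestamp)
    part.owner_id = to_company.company_id
    part.history.append(tx)
    return tx


airline = Company("C-2", "Buyer Air", "airline")
part = Part("PN-1001", "Example Aero", "overhauled", "Hangar 4", owner_id="C-1")
record_transfer(part, airline, timestamp=1_700_000_000)
print(part.owner_id, len(part.history))  # C-2 1
```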

Data Storage: Smart Contracts vs. NFTs

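NFTs are themselves implemented by smart contracts, so the real choice is between modelling each part as an NFT and modelling parts as custom data objects managed by a smart contract. Both approaches have their merits, but custom data objects are better suited for aviation parts tracking. Here’s why: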

- Data Integrity: Smart contract data objects can store detailed maintenance history, manufacturing data, and location information for each part, promoting transparency and informed decision-making.

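- Tracking Multiple Parts: Unlike NFTs, which are designed for unique ownership, data objects can efficiently track multiple parts of the same type, which is typical for aviation parts.

- Smart Contract Automation: Smart contracts can automate tasks based on defined rules. For example, they can flag a part as due for maintenance once a recorded update shows it has reached its service life. Because contracts only run when a transaction calls them, such checks are typically triggered by usage updates or by an off-chain service.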
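The last two points can be sketched together in Python. This is an off-chain simulation under stated assumptions: the `PartsLedger` class, its methods, and the hour-based service-life rule are hypothetical, chosen only to show quantity tracking per part type and a rule-based maintenance check.

```python
class PartsLedger:
    """Tracks how many units of each part number every holder owns."""

    def __init__(self):
        self._units = {}  # (holder, part_number) -> units held

    def units_held(self, holder, part_number):
        return self._units.get((holder, part_number), 0)

    def register(self, holder, part_number, units):
        """Credit newly registered units to a holder."""
        if units <= 0:
            raise ValueError("units must be positive")
        key = (holder, part_number)
        self._units[key] = self.units_held(holder, part_number) + units

    def transfer(self, sender, receiver, part_number, units):
        """Move units between holders, rejecting overdrafts."""
        if units <= 0 or self.units_held(sender, part_number) < units:
            raise ValueError("invalid or insufficient units")
        self._units[(sender, part_number)] -= units
        self.register(receiver, part_number, units)


def maintenance_due(hours_used, service_life_hours):
    """Rule check: a part is due once usage reaches its service life."""
    return hours_used >= service_life_hours


ledger = PartsLedger()
ledger.register("Supplier Co", "PN-1001", 10)
ledger.transfer("Supplier Co", "Buyer Air", "PN-1001", 3)
print(ledger.units_held("Supplier Co", "PN-1001"))  # 7
print(maintenance_due(hours_used=5000, service_life_hours=5000))  # True
```

A single ledger entry per part type and holder avoids minting one token for every identical unit, which is the efficiency argument made above.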

By focusing on these considerations and leveraging the strengths of smart contracts, we can design a platform that simplifies aviation parts management, enhances transparency within the supply chain, and improves overall operational efficiency.

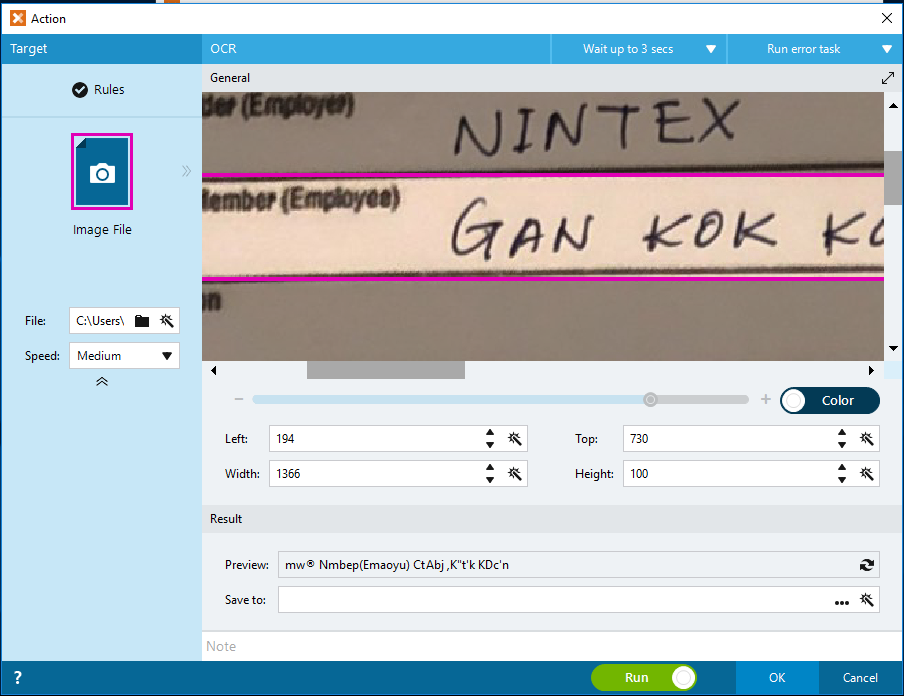

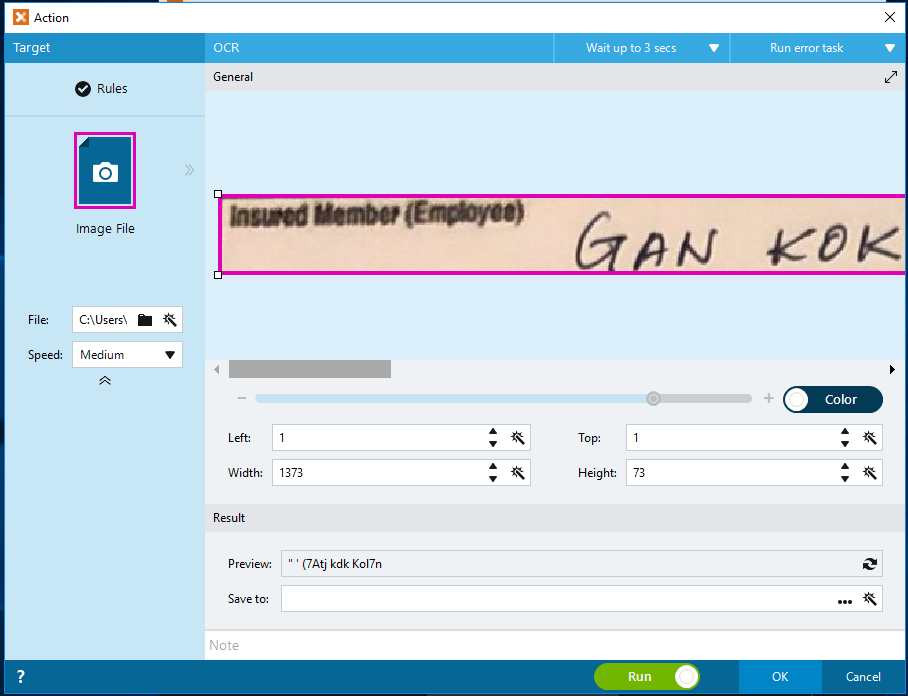

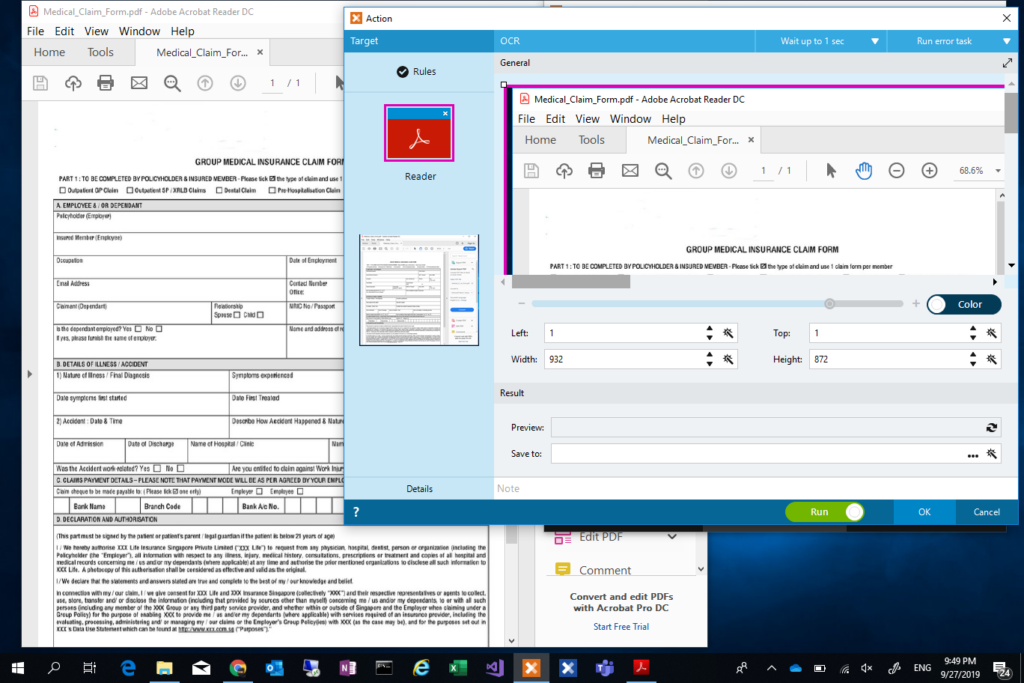

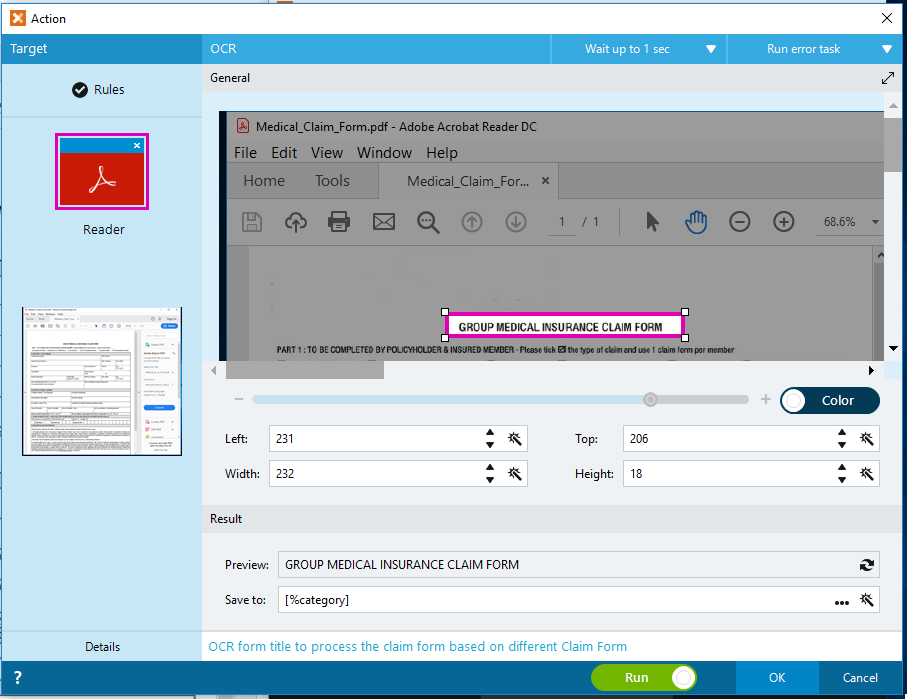

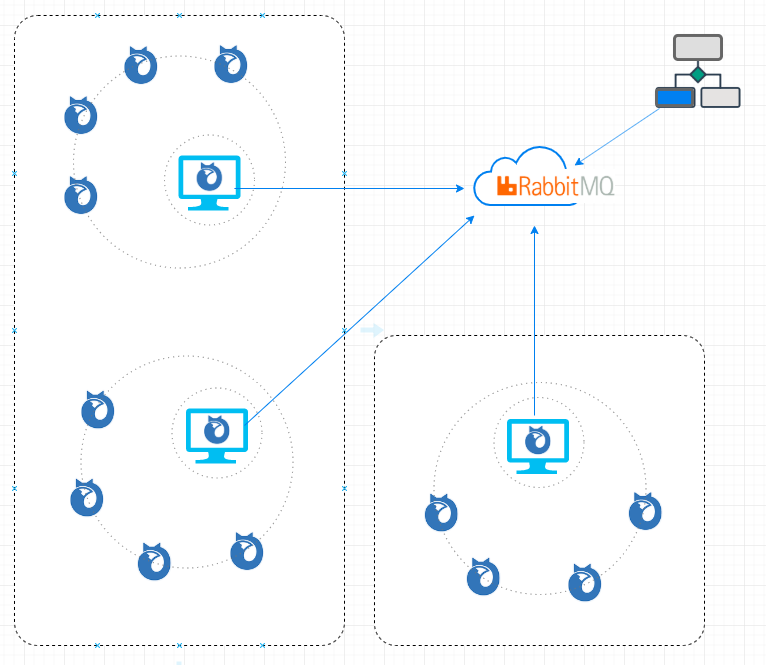

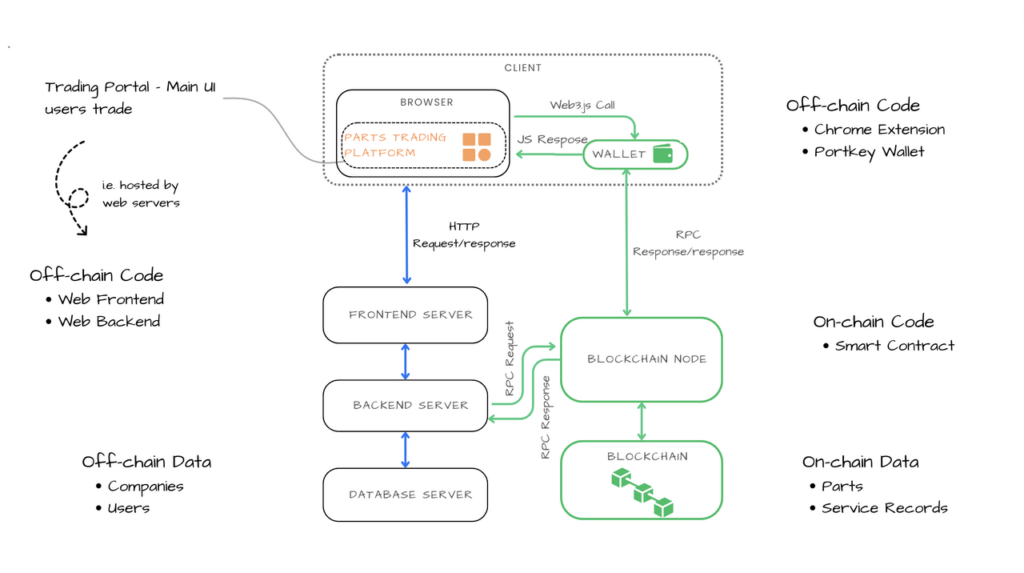

Visualizing Architecture

Leveraging the design considerations, we can now visualize the platform’s architecture through a detailed diagram. This diagram illustrates the key components, their interactions, and data flow.

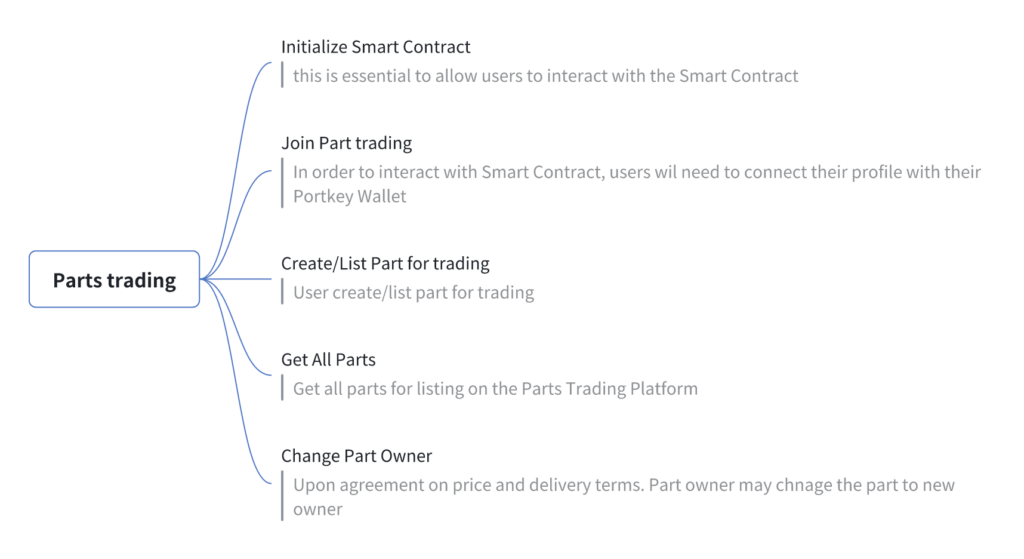

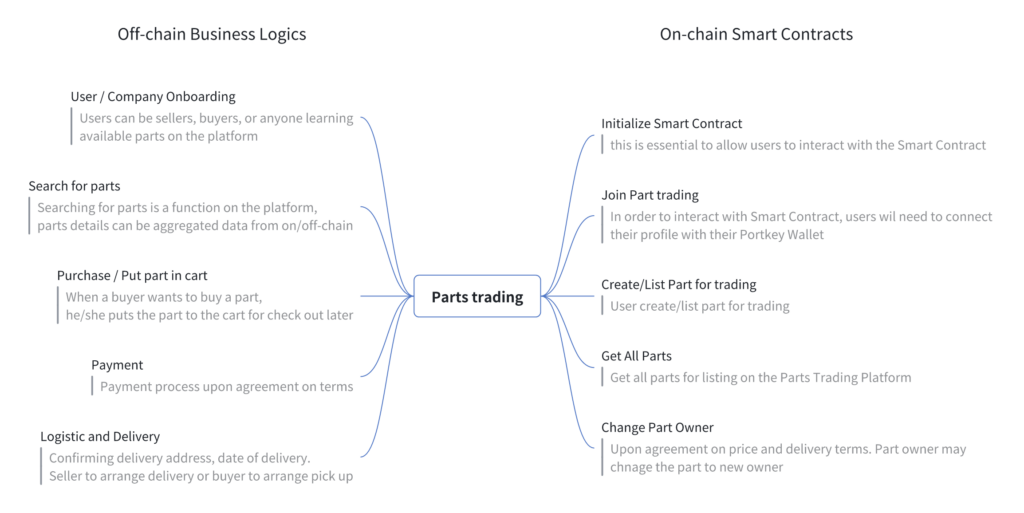

Smart Contract Functionality

Smart contracts will be the engine powering core functionalities and ensuring secure and transparent transactions within the aviation parts ecosystem:

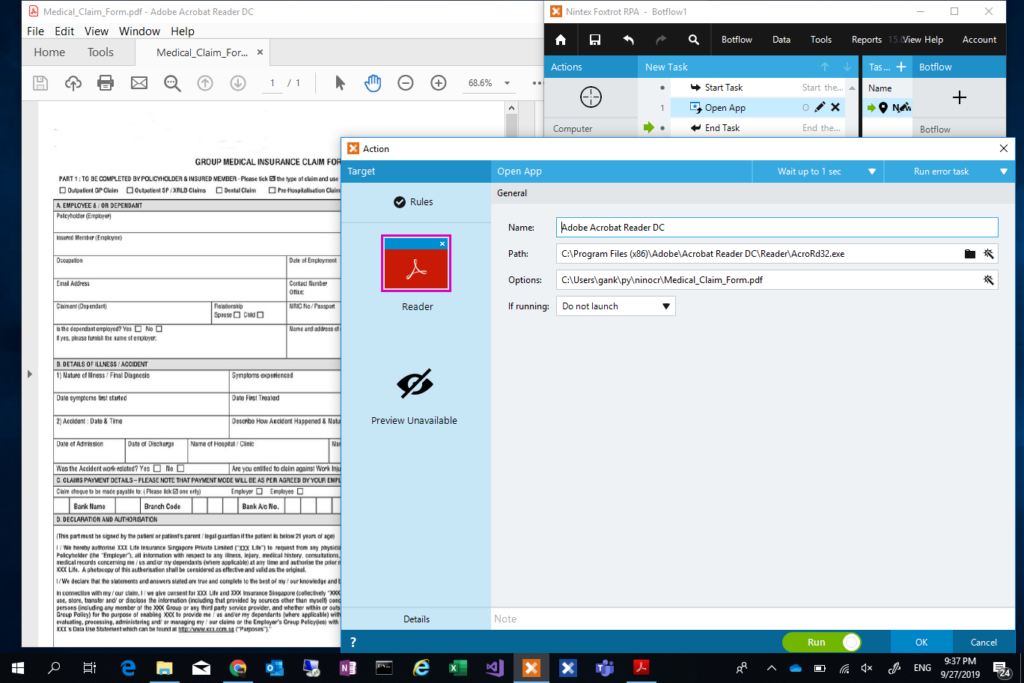

Off-Chain Considerations

While this document focuses on the core on-chain architecture, it’s important to acknowledge the crucial role of off-chain components in a complete solution. These components may include functionalities like:

- User and company onboarding processes

- Parts search

- Part purchase

- Secure payment gateways

- Logistics and delivery arrangements

- User interfaces and applications

Integrating these off-chain elements seamlessly with the on-chain infrastructure is essential for delivering a user-friendly and comprehensive platform.
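One way to connect the two sides is for an off-chain service to replay events emitted by the contract into a search index that the user interface can query. The Python sketch below assumes hypothetical event names (`PartListed`, `OwnershipTransferred`) and fields; it is an illustration of the idea, not the platform’s actual event schema.

```python
def build_index(events):
    """Replay contract events (oldest first) into a dict of open listings."""
    index = {}
    for event in events:
        if event["type"] == "PartListed":
            index[event["listing_id"]] = {
                "part_number": event["part_number"],
                "condition": event["condition"],
                "price": event["price"],
            }
        elif event["type"] == "OwnershipTransferred":
            # A sold part is no longer available, so drop its listing.
            index.pop(event["listing_id"], None)
    return index


def search(index, part_number, max_price=None):
    """Return matching (listing_id, listing) pairs, cheapest first."""
    matches = [
        (listing_id, listing)
        for listing_id, listing in index.items()
        if listing["part_number"] == part_number
        and (max_price is None or listing["price"] <= max_price)
    ]
    return sorted(matches, key=lambda item: item[1]["price"])


events = [
    {"type": "PartListed", "listing_id": 1, "part_number": "PN-1001",
     "condition": "new", "price": 5000},
    {"type": "PartListed", "listing_id": 2, "part_number": "PN-1001",
     "condition": "overhauled", "price": 3200},
    {"type": "OwnershipTransferred", "listing_id": 1},
]
index = build_index(events)
print([listing_id for listing_id, _ in search(index, "PN-1001")])  # [2]
```

Keeping search off-chain leaves the blockchain as the source of truth for ownership while queries stay fast and free of transaction fees.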

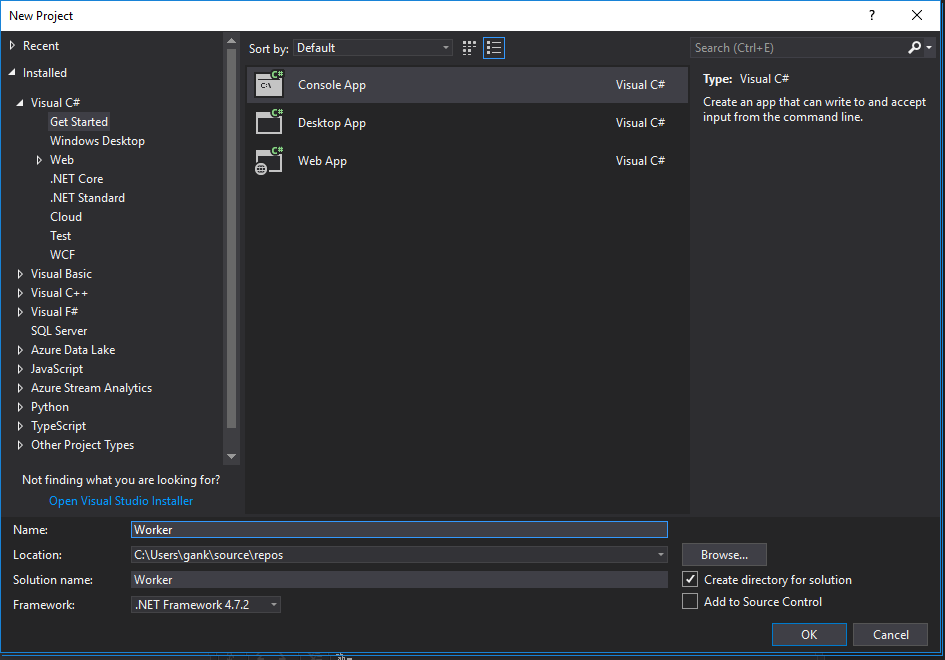

Next Steps: Building the Platform

With a clear design in place, the next steps involve:

- Proof of Concept (PoC): Develop a basic version to validate the core functionalities and gather user feedback.

- Prototype/MVP: Build a functional prototype or Minimum Viable Product (MVP) to further refine the platform based on user testing and real-world scenarios.

This structured approach ensures we move forward with a well-defined plan and a clear understanding of the development roadmap.

Conclusion

Web3 architecture is a new frontier, but with careful planning and the right approach, you can build secure and scalable applications. By understanding the core components, deciding deliberately what belongs on-chain and what stays off-chain, and validating the design through a PoC and an MVP, you can be a part of shaping the next generation of the internet.